- 13 Posts

- 77 Comments

57·6 months ago

Before y’all get excited, the press release doesn’t actually mention the term “open source” anywhere.

> Winamp will open up its code for the player used on Windows, enabling the entire community to participate in its development. This is an invitation to global collaboration, where developers worldwide can contribute their expertise, ideas, and passion to help this iconic software evolve.

This, to me, reads like it’s going to be a “source available” model, perhaps with contributions handled under some sort of Contributor License Agreement (CLA). So it’s best to hold off on any celebrations until we see the actual license.

Nice, great to see the continued development of an old-school, lightweight browser. We need more active alternatives to the bloated duopoly.

51·6 months ago

Since you’re on Linux, it’s just a matter of installing the right packages from your distro’s package manager. There are lots of articles on the Web; just google your app + “ROCm”. The main thing you gotta keep in mind is version dependencies: since ROCm 6.0/6.1 was released recently, some programs may not have been updated for it yet. So if your distro packages the most recent version, your app might not support it yet.

This is why many ML apps also come as a Docker image with specific versions of libraries bundled with them - so that could be an easier option for you, instead of manually hunting around for various package dependencies.

Also, chances are that your app may not even know/care about ROCm, if it just uses a library like PyTorch / TensorFlow etc. So just check its requirements first.
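If your app does sit on top of PyTorch, a quick way to see what you’ve actually got installed is to ask PyTorch itself. This is a minimal sketch, assuming PyTorch is the library in question: ROCm builds set `torch.version.hip` to a version string (CUDA builds leave it as `None`), and ROCm GPUs are still exposed through the `torch.cuda` API. The function name `describe_torch_backend` is just illustrative.

```python
def describe_torch_backend() -> str:
    """Report which GPU backend the installed PyTorch build targets, if any."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"

    # Set to a version string on ROCm builds, None on CUDA/CPU builds
    hip = getattr(torch.version, "hip", None)
    # Set to a version string on CUDA builds, None on ROCm/CPU builds
    cuda = getattr(torch.version, "cuda", None)
    # ROCm devices are reported through the torch.cuda namespace too
    gpu = torch.cuda.is_available()

    if hip:
        backend = f"ROCm/HIP {hip}"
    elif cuda:
        backend = f"CUDA {cuda}"
    else:
        backend = "CPU-only"
    return f"PyTorch {torch.__version__}, {backend} build, GPU available: {gpu}"


if __name__ == "__main__":
    print(describe_torch_backend())
```

If this reports a CUDA or CPU-only build on an AMD machine, you’ve most likely installed the default wheel rather than the ROCm one.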

As for AMD vs nVidia in general, there are mainly three areas where AMD has lagged behind: RTX (ray tracing), compute and super sampling.

- For RTX, there have been improvements in performance with the RDNA3 cards, but AMD still lags behind by a generation. For instance, the latest 7900 XTX’s ray tracing performance is equivalent to nVidia’s RTX 3080.

- Compute is catching up, as I mentioned earlier, and in some cases the performance may even match nVidia. This is very application/library specific though, so you’ll need to look it up.

- Super sampling is a bit of a weird one. AMD has FSR and it does a good job in general. In some cases, it may even perform better, since it uses much simpler calculations as opposed to nVidia’s deep learning technique (DLSS). In fact, AMD’s FSR can be used with any card, as long as the game supports it. And therein lies the catch: only something like a third of the games out there support it, and even fewer support the latest FSR 3. But there are mods out there which can enable FSR (check Nexus Mods) that you might be able to use. In any case, FSR/DLSS isn’t critical unless you’re gaming on a 4K+ monitor.
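To put rough numbers on why upscaling matters most at 4K: upscalers like FSR render each frame at a lower internal resolution and then upscale it. The per-axis scale factors below are the ones AMD documents for FSR 2’s presets (Quality 1.5x, Balanced 1.7x, Performance 2.0x, Ultra Performance 3.0x). This sketch only does the resolution arithmetic; it ignores the cost of the upscaling pass itself and says nothing about actual FPS gains.

```python
# Per-axis scale factors for the FSR 2 quality presets
FSR2_SCALE = {
    "Quality": 1.5,
    "Balanced": 1.7,
    "Performance": 2.0,
    "Ultra Performance": 3.0,
}


def render_resolution(out_w: int, out_h: int, scale: float) -> tuple[int, int]:
    """Internal render resolution for a given output resolution and per-axis scale."""
    return round(out_w / scale), round(out_h / scale)


def pixel_savings(out_w: int, out_h: int, scale: float) -> float:
    """How many times fewer pixels get shaded per frame (upscaling cost ignored)."""
    w, h = render_resolution(out_w, out_h, scale)
    return (out_w * out_h) / (w * h)


if __name__ == "__main__":
    for name, s in FSR2_SCALE.items():
        w, h = render_resolution(3840, 2160, s)
        print(f"4K {name:<17} renders at {w}x{h}, "
              f"~{pixel_savings(3840, 2160, s):.2f}x fewer pixels")
```

At 4K, "Quality" renders internally at 2560x1440, i.e. 2.25x fewer pixels per frame, which is a big deal at that resolution but far less so when the target is already 1080p.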

You can check out Tom’s Hardware GPU Hierarchy for the exact numbers - scroll down halfway to read about the RTX and FSR situation.

So yes, AMD does lag behind nVidia, but whether this impacts you really depends on your needs and use cases. If you’re a Linux user though, getting an AMD card is a no-brainer - it just works so much better: no need to deal with proprietary driver headaches, no update woes, excellent Wayland support etc.


11·6 months ago

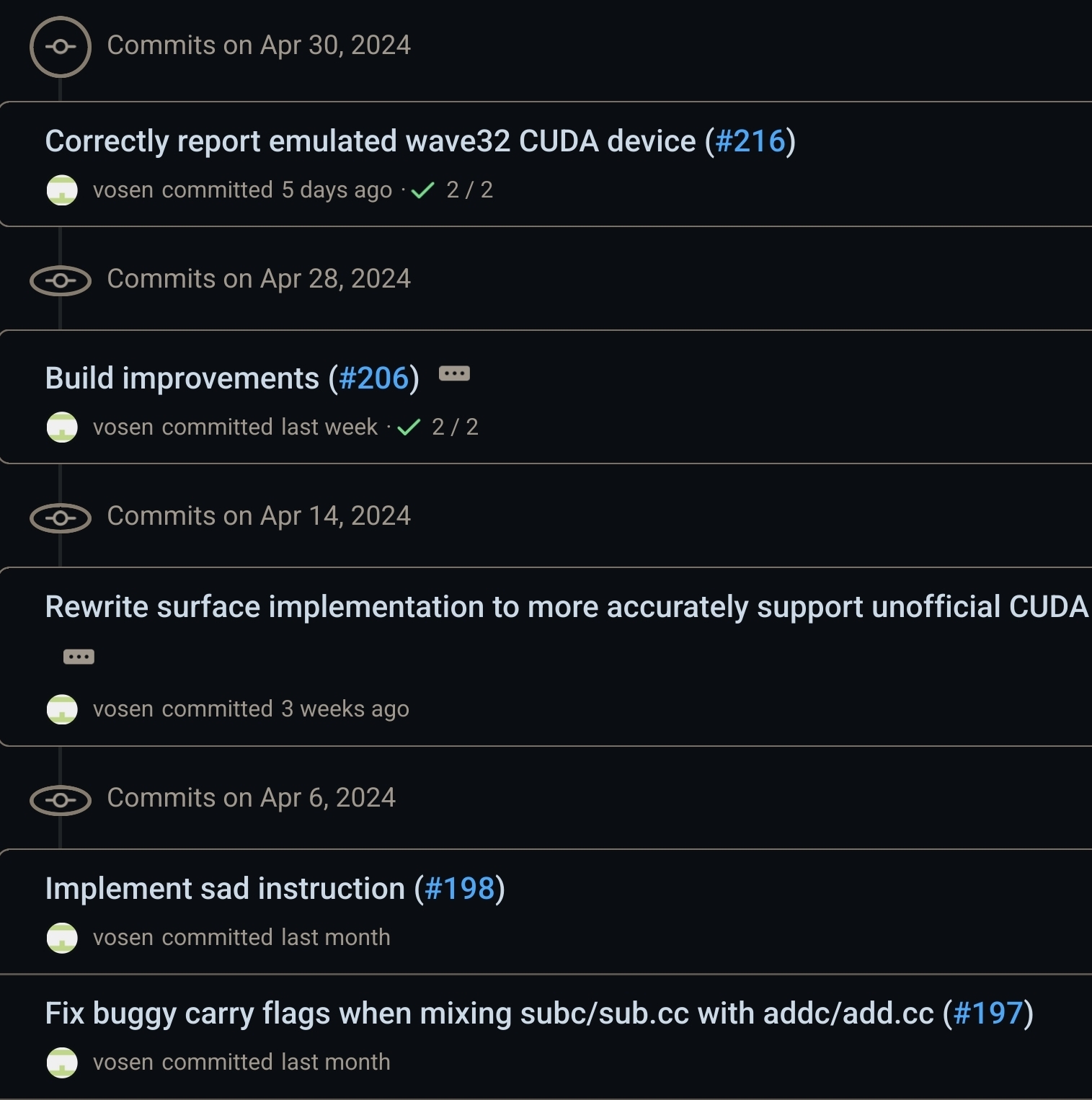

11·6 months agoI based my statements on the actual commits being made to the repo, from what I can see it’s certainly not “floundering”:

In any case, ZLUDA is really just a stop-gap arrangement so I don’t see it being an issue either way - with more and more projects supporting AMD cards, it won’t be needed at all in the near future.

31·6 months ago

It’s not “optimistic”, it’s actually happening. Don’t forget that GPU compute is a pretty vast field, and not every field/application has a hard-coded dependency on CUDA/nVidia.

For instance, both TensorFlow and PyTorch work fine with ROCm 6.0+ now, and this enables a lot of ML tasks such as running LLMs like Llama2. Stable Diffusion also works fine - I tested 2.1 a while back and performance has been great on my Arch + 7800 XT setup. There are plenty more examples like this where AMD is already a viable option. And don’t forget ZLUDA, which continues to be improved.

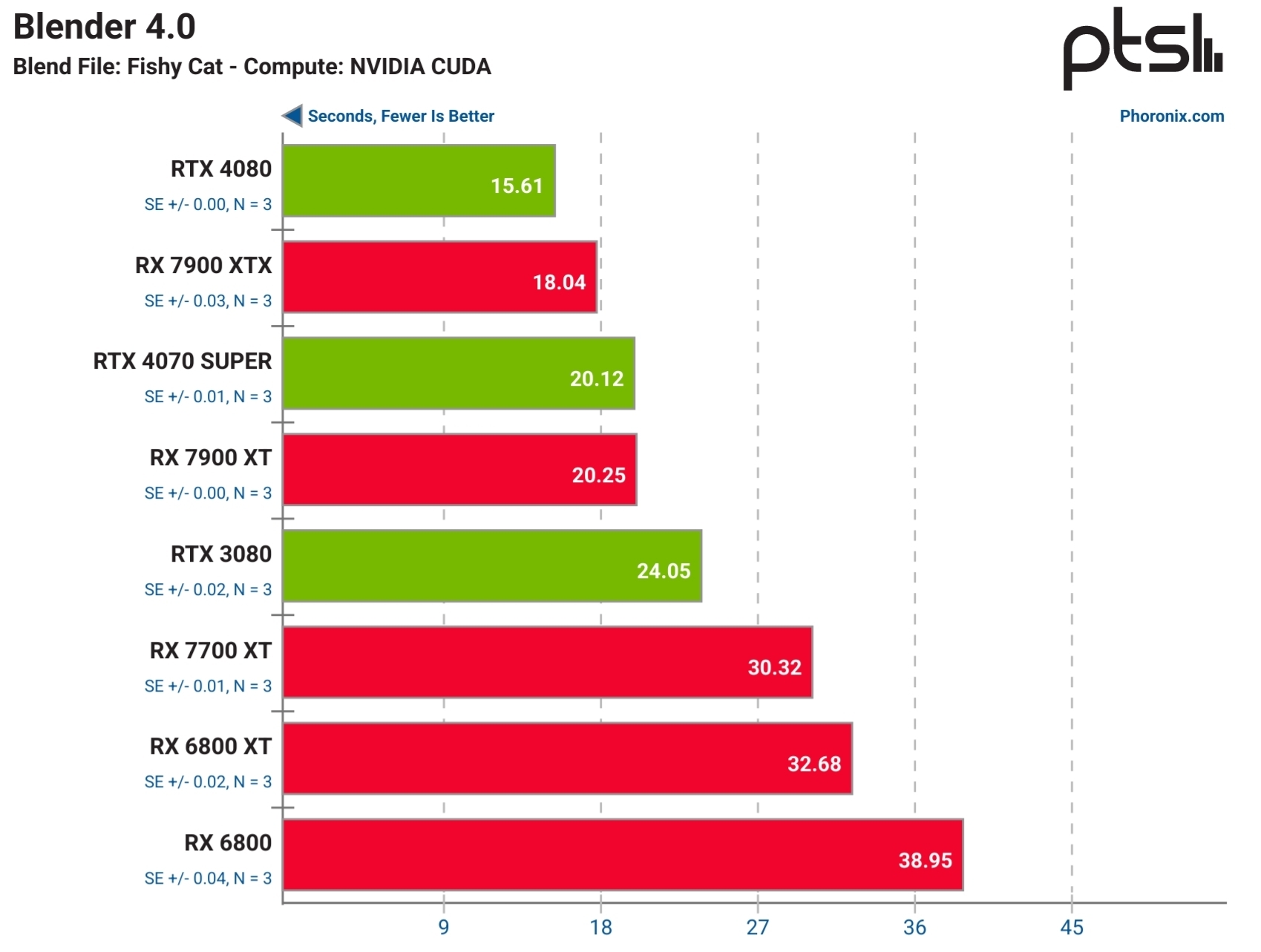

I mean, look at this benchmark from Feb, that’s not bad at all:

And ZLUDA has had many improvements since then, so this will only get better.

Of course, whether all this makes an actual dent in nVidia’s compute market share is a completely different story (thanks to enterprise $$$ + existing hw that’s already out there), but the point is, for many people/projects, ROCm is already a viable alternative to CUDA. And this will only improve with time. Just within the last 6 months, for instance, there have been VAST improvements in both ROCm (like the 6.0 release) and compatibility with major projects (like PyTorch). 6.1 was released only a few weeks ago with improved SD performance, a new video decode component (rocDecode), much faster matrix calculations with the new EigenSolver etc. It’s a very exciting space to be in, to be honest.

So you’d have to be blind not to notice these rapid changes that are really happening. And yes, right now it’s still very, very early days for AMD; they’ve got a lot of catching up to do, and there’s a lot of scope for improvement too. But it’s happening for sure; AMD and the community aren’t sitting idle.

12·6 months ago

The best option is to just support the developer/project by the method they prefer the most (ko-fi/patreon/crypto/beer/t-shirts etc).

If the project doesn’t accept any donations but accepts code contributions instead (or you want to develop something that doesn’t exist), you can directly hire a freelancer to work on what you want, from sites like freelancer.com.

I personally use a ThinkPad Z13 (all AMD; it’s nice but pricey), but I’d recommend getting a Framework (which wasn’t an option for me back then). I think modular and repairable laptops are cool, plus they seem to be well supported by the Linux community.

Kvaesitso. Opensource, minimal, search-based launcher. My only complaint is that it’s not optimised for foldables, otherwise it’s a great launcher.

Thanks! I did that, but it doesn’t seem to get rid of them from “trending”.

Anyone here know how to disable shorts? I could’ve sworn there was an option to disable it previously, but I can’t find it any more.

3·7 months ago

They are actually pretty decent though? At least all the ones since Zen 3+ (Radeon 680M, 780M etc.)

I have the previous model, the UM780 XTX (same iGPU - Radeon 780M), and it’s been very decent for 1080p gaming (medium-high settings, depending on the game). Even 1440p is playable, depending on your game/settings. Cyberpunk 2077, for instance, runs perfectly at 70 FPS at 1440p on low settings, which is incredible considering how much trouble this game caused when it first came out.

I also have a ThinkPad with a Zen 3+ APU (Radeon 680M), and it can run Forza Horizon 4 at Ultra settings at a locked 60 FPS.

On both these machines, I game on Linux (Bazzite and Arch), so it’s pretty awesome that I can run Windows games and get so much performance with cheap hardware, and using open-source drivers.

So yeah, these are some really great times for APU / mini PC / AMD / Linux fans.

> if any one of my Windows or Android units got stolen and somehow cracked into or something.

This shouldn’t be a concern if you’re using disk encryption and secure passwords, which is generally the default behaviour on most systems these days.

On Android, you don’t need to worry about anything as long as you’ve got a pin/password configured, as disk encryption has been enabled by default for like a decade now.

On Windows, if you’re on the Pro/Enterprise edition, you can use BitLocker, but if you’re on Home, you can use “device encryption” (which is like a lightweight BitLocker) - but that requires a TPM chip and your Windows user account to be linked to a Microsoft account. If that is not an option, you could use VeraCrypt instead, which is an open-source disk encryption tool. Another option, if you’re on a laptop, could be Opal encryption (aka TCG Opal SED), assuming your drive/BIOS supports it.

TL;DR: Encrypt yo’ shit, and you don’t need to worry about your data if your device gets stolen.

14·7 months ago

Actually this is probably a good time for you to get into Rust, since everyone is still at the starting point (ish), and you, unlike others, don’t have decades of C/C++ habits ingrained in you. So if you’re starting off fresh, Rust is not a bad language to dip your toes into. :)

281·7 months ago

It’s easiest to just register a domain name and use Cloudflare Tunnel. No need to worry about dynamic DNS, port forwarding etc. Plus, you get the security advantages of DDoS protection and a web application firewall (WAF). Finally, you get portability - you can change your ISP or router, or even move your entire lab into the cloud if you wanted to, and you won’t need to change a single thing.
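For a sense of what the setup involves: a named tunnel is usually driven by a small `config.yml` for `cloudflared`, containing the tunnel ID, a credentials file, and a list of `ingress` rules that map public hostnames to local services, ending with a catch-all rule. The sketch below just renders such a file from Python. The tunnel ID, hostnames, ports and credentials path are all hypothetical placeholders, so check Cloudflare’s docs for the exact options your `cloudflared` version supports.

```python
# Hypothetical values: swap in your own tunnel ID, domain and local services
TUNNEL_ID = "00000000-0000-0000-0000-000000000000"
CREDENTIALS = f"/etc/cloudflared/{TUNNEL_ID}.json"
SERVICES = {
    "jellyfin.example.com": "http://localhost:8096",
    "git.example.com": "http://localhost:3000",
}


def render_config(tunnel_id: str, credentials: str, services: dict[str, str]) -> str:
    """Render a cloudflared config.yml with one ingress rule per hostname."""
    lines = [
        f"tunnel: {tunnel_id}",
        f"credentials-file: {credentials}",
        "ingress:",
    ]
    for hostname, service in services.items():
        lines.append(f"  - hostname: {hostname}")
        lines.append(f"    service: {service}")
    # cloudflared expects the last rule to be a catch-all with no hostname
    lines.append("  - service: http_status:404")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_config(TUNNEL_ID, CREDENTIALS, SERVICES), end="")
```

After creating the tunnel and pointing DNS at it (`cloudflared tunnel create` and `cloudflared tunnel route dns`), `cloudflared tunnel run` makes an outbound connection to Cloudflare, which is why nothing needs to be opened or forwarded on your router.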

I have a lab set up on my mini PC that I often take to work with me, and it works the same regardless of whether it’s going through my work’s restricted proxy or the NAT at home. Zero config required on the network side.

35·7 months ago

https://www.freewear.org/ - They support several opensource projects, so there’s a good chance your favorite project is also there.

Same with https://www.hellotux.com/ - but unlike others, they embroider their logos instead of just printing them - as a result, they look “official” and last longer.

Discordo is a completely open-source Discord frontend, and it’s a native TUI app written 100% in Go - no Electron/HTML/JavaScript crap.

If you want a GUI app and something not so barebones, there’s Dissent - a GTK4 app, also written in Go, with no Electron/HTML/JavaScript crap.

And if you’re a Qt fan, there’s also QTCord, written in Python.

cc: @powermaker450@discuss.tchncs.de

This shouldn’t even be a question lol. Even if you aren’t worried about theft, encryption has a nice bonus: you don’t have to worry about securely erasing your drives when you want to get rid of them. I mean, sure, it’s not that big of a deal to wipe a drive, but sometimes you’re unable to do so - for instance, the drive could fail before you get the chance to wipe it. So you end up getting rid of the drive as-is, but an opportunist could get hold of that drive, attempt to repair it and recover your data. Or maybe the drive fails while it’s still under warranty and you want to RMA it - with encryption on, you don’t have to worry about some random person accessing your data.

13·7 months ago

That may be fine for ordinary gadgets, but many people wear their smartwatch at night for sleep quality and HRV tracking. With my Garmin for instance, I usually wear it almost all week for continuous health tracking, and only take it off for a short while on the weekend for charging. It would really suck going from that, to having to charge my watch every day.

If you use Affinity on Windows, you may be interested in this: https://github.com/lf-/affinity-crimes

I’ve heard of Intel Arc users, for instance, not being able to play certain games because the game checks for an AMD/nVidia GPU, so you’d have to fake the GPU vendor to get it to work.

E.g. see stuff like this: https://www.phoronix.com/news/Intel-Graphics-Hogwarts-Legacy

Or https://www.phoronix.com/news/The-Finals-Intel-Arc-Graphics
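A minimal sketch of the kind of naive check being described, assuming a game that allowlists GPUs by PCI vendor ID (0x10DE for nVidia, 0x1002 for AMD, 0x8086 for Intel), which is what graphics APIs such as Vulkan report in their `vendorID` field. The allowlist and function names are hypothetical, not taken from any specific game. The point is that a check like this rejects a perfectly capable Arc card, and having the driver report a different vendor ID is enough to get past it.

```python
from typing import Optional

# PCI vendor IDs, as reported by graphics APIs (e.g. Vulkan's vendorID)
NVIDIA = 0x10DE
AMD = 0x1002
INTEL = 0x8086

# Hypothetical allowlist a game might hard-code
SUPPORTED_VENDORS = {NVIDIA, AMD}


def game_allows_gpu(vendor_id: int) -> bool:
    """Naive vendor check: rejects any GPU not made by an allowlisted vendor."""
    return vendor_id in SUPPORTED_VENDORS


def reported_vendor(real_vendor: int, spoof_as: Optional[int] = None) -> int:
    """Vendor ID the driver reports to the game; a spoofing workaround overrides it."""
    return spoof_as if spoof_as is not None else real_vendor


if __name__ == "__main__":
    print(game_allows_gpu(reported_vendor(INTEL)))                # False: Arc card rejected
    print(game_allows_gpu(reported_vendor(INTEL, spoof_as=AMD)))  # True: spoofed vendor accepted
```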