What advantage does EGS have? Epic levels of anti-consumer sentiment? Horrific customer service? Biggest piece of shit company in the gaming industry?

Father, Hacker (Information Security Professional), Open Source Software Developer, Inventor, and 3D printing enthusiast

Chess. It’s over 1500 years old!

As someone who’s caught a leaker in the past (well, someone who was exfiltrating company secrets to a competitor), I can say catching leakers is actually pretty easy if you have any modicum of control over the tools they use and the places they work. Barring that, no. Just no. It’s not going to happen.

If a leaker is gullible and stupid some trickery is possible but I wouldn’t get my hopes up, Warner Music. Seems like a job that’s doomed to fail from the start. I wouldn’t even bother unless they know it’s just a job on paper and are actually just looking to give someone’s kid a legit-sounding job to pad their resume 🤷

Not only that but if I were in charge of hiring I’d be extremely skeptical of any and all applicants. Anyone smart enough to do the job will know it’s impossible and will just become a master of stalling and picking low hanging fruit (aka useless) and everyone else is just a fraud.

Speed and memory efficiency, mostly. If you ever have to grep for something in a large number of files ripgrep will be done while regular grep will only be reaching the 25% mark.

The modern man uses ripgrep 👍

Don’t give up hope! We still have a long way to go in terms of optimal athlete squeezing 👍

Meh. She seems way too focused.

The guy looks like he does this every day, constantly. Brushing his teeth? He fires the gun. Tying his shoes? He fires the gun. Wiping his ass? He fires the gun.

Firing the gun is just something he does. He does it a lot. Then one day the Olympics shooting coach showed up and decided to put him out there on behalf of Turkey.

It’s from the actual article. I know, I know! Totally not necessary to read it, right? 🤣

You’re just explaining basic Android functionality using random apps of your choosing.

You’re not wrong… but is that attitude really necessary? It comes off as, “You’re just explaining basic shit any idiot would know, loser! 😝”

Besides, not all apps that load external URLs are like that: A lot of them will use Android Web View which annoys TF out of me.

You want people to move to your rural town in the age of people working from home? Invest in fantastic high speed Internet. If the houses are cheap and the nearest grocery store isn’t an hour away you’ll get all sorts of very smart, highly-skilled people moving there.

Unless there’s Confederate flags and MAGA signs everywhere. Then those people will stay the fuck away.

If you can put up a pride flag at town hall and keep it up year-round without a local political firestorm you might stand a chance 🤷

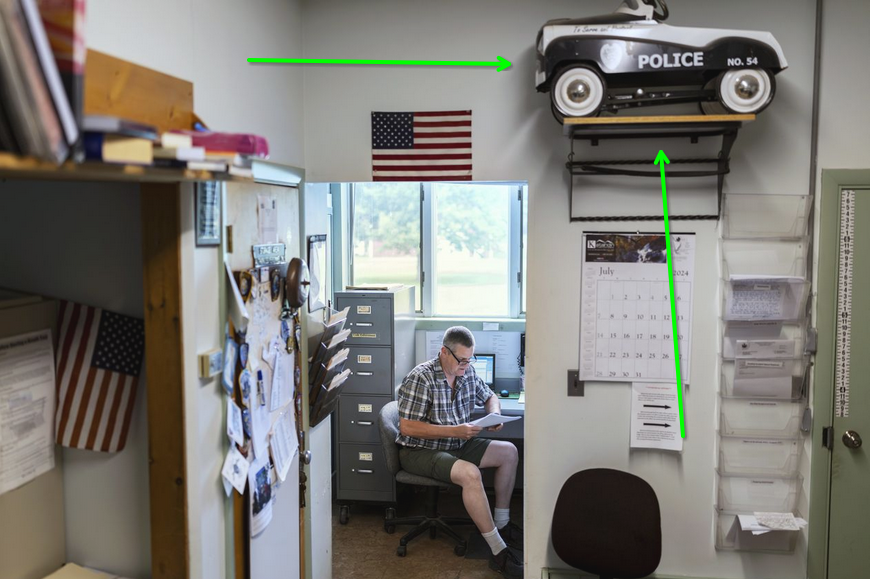

You know the town budget is getting tight when this is your town’s police car:

I mean, as long as the guy holds up a flashing blue light and shouts police siren sounds really loud it just might work 🤷

If you install Firefox Focus and make it your default browser on Android the Jerboa client (and others I think) will use it when loading links unless you have a specific app associated with a given URL (e.g. NYT app, NPR app, etc).

If you’re not familiar with Firefox Focus it’s a version of Firefox built for privacy. It basically makes it so that every URL you load behaves like a private browser tab. It also has ad-blocking built in which is sweet (though it doesn’t work on everything/not as good as uBlock Origin).

Oops: Just realized your question is related to Mastodon and not Lemmy. Though I’m certain that Firefox Focus would work the same way for Mastodon clients.

Actually, I just checked Tusky and yes, it does load URLs in Firefox Focus. So my advice is still good 👍

Options:

This is an “it’s turtles all the way down!” problem. An application has to be able to store its encryption keys somewhere. You can encrypt your encryption keys but then where do you store that key? Ultimately any application will need access to the plaintext key in order to function.

On servers the best practice is to store the encryption keys somewhere that isn’t on the server itself. A networked Hardware Security Module (HSM) is the usual choice but literally any location that isn’t physically on/in the server itself is good enough. Some Raspberry Pi attached to the network in the corner of the data center would be nearly as good because the attack you’re protecting against with this kind of encryption is someone walking out of the data center with your server (and then decrypting the data).

With a device like a phone you can’t use a networked HSM since your phone will be carried around with you everywhere. You could store your encryption keys out on the Internet somewhere but that actually increases the attack surface. As such, the encryption keys get stored on the phone itself.

Phone OSes include tools like encrypted storage locations for things like encryption keys but realistically they’re no more secure than storing the keys as plaintext in the application’s app-specific store (which is encrypted on Android by default; not sure about iOS). Only that app and the OS itself have access to that storage location so it’s basically exactly the same as the special “secure” storage features… Except easier to use and less likely to be targeted, exploited, and ultimately compromised because again, it’s a smaller attack surface.

If an attacker gets physical access to your device you must assume they’ll have access to everything on it unless the data is encrypted and the key for that isn’t on the phone itself (e.g. it uses a hash generated from your thumbprint or your PIN). In that case your effective encryption key is your thumb(s) and/or PIN, because the Signal app’s encryption keys are already encrypted on the filesystem.
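To make the “key isn’t on the phone itself” idea concrete, here’s a minimal sketch of deriving a key from a PIN. It’s an illustration, not how Android, iOS, or Signal actually do it: the choice of PBKDF2-HMAC-SHA256, the iteration count, the `derive_key` name, and the example PINs are all my assumptions.

```python
import hashlib
import hmac
import os

# Assumption: PBKDF2-HMAC-SHA256 with a random per-device salt. Real phones
# also mix in hardware-bound secrets; this sketch only shows the PIN part.
ITERATIONS = 200_000  # illustrative work factor, not a recommendation


def derive_key(pin: str, salt: bytes, iterations: int = ITERATIONS) -> bytes:
    """Stretch a PIN into a 32-byte key. Only the salt needs to be stored."""
    return hashlib.pbkdf2_hmac(
        "sha256", pin.encode("utf-8"), salt, iterations, dklen=32
    )


salt = os.urandom(16)           # stored on the device; not a secret
key = derive_key("4921", salt)  # never written to disk; recomputed at unlock

# The same PIN and salt reproduce the key; a wrong PIN gives a different one.
assert hmac.compare_digest(key, derive_key("4921", salt))
assert not hmac.compare_digest(key, derive_key("0000", salt))
```

The key only exists while the user is typing in the PIN, so someone holding the powered-off device gets the salt and the ciphertext but not the key. The catch is that a four-digit PIN has only 10,000 possible values, so the iteration count just slows a guesser down.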

Going full circle: You can always further encrypt something or add an extra step to accessing encrypted data but that just adds inconvenience and doesn’t really buy you any more security (realistically). It’s turtles all the way down.

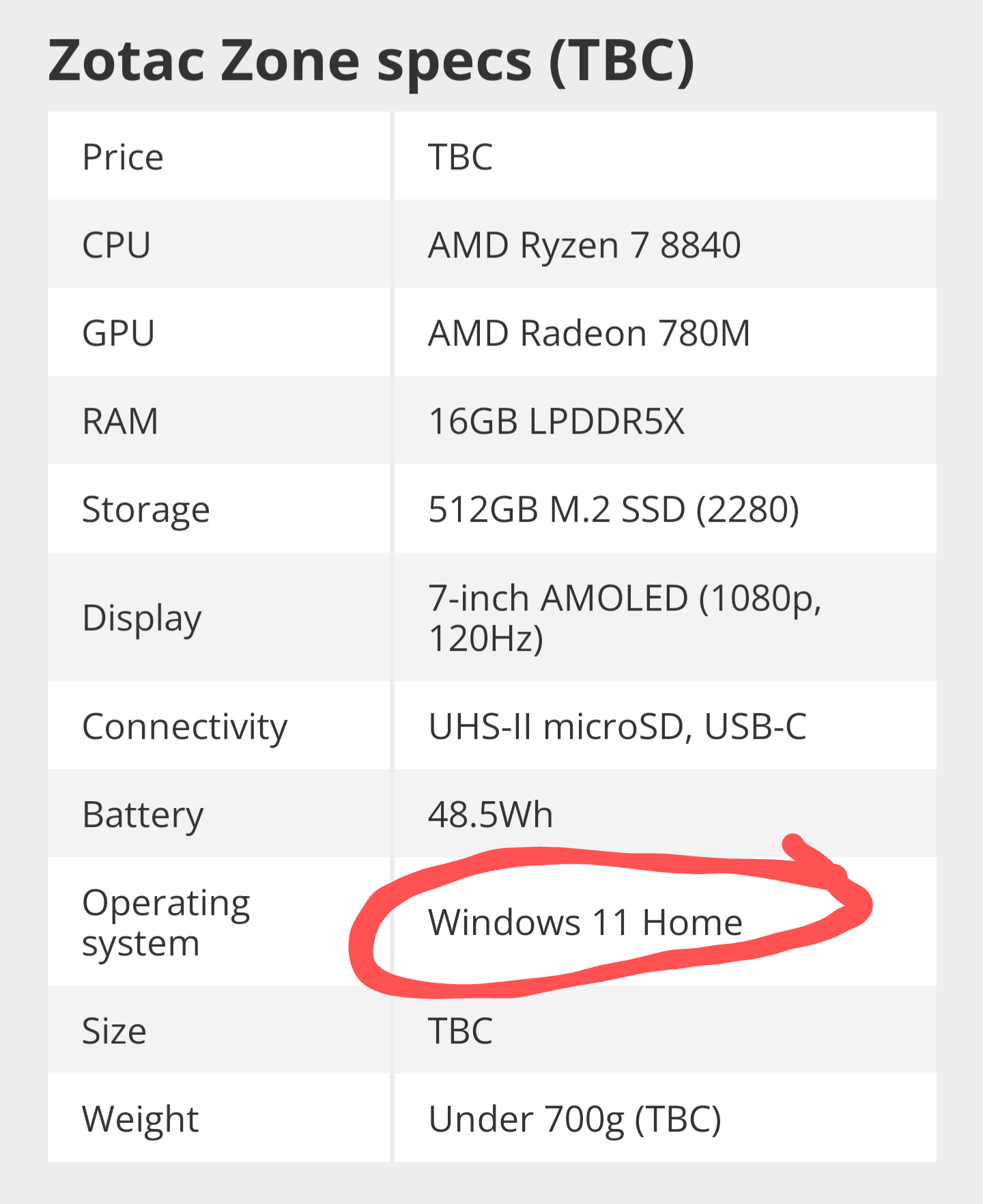

Just need the Stable Diffusion benchmarks.

It’s more that they got sweaty and uncomfortable. Kids need to move around, ya know?

To be fair, carrying a child on your back can be fun for MAX an hour at a time. So maybe perfect for a video game 🤔

I used to carry my kids in a kid backpack for hikes on the weekends and they loved it. Too much, actually. In the Florida heat that’s a good way to sweat your ass off.

Can’t say the thing missing from those fun hikes through the arboretum was fashion 🙄

It’s garbage.

You’re thinking that every Android device is reasonably new. This is not the case.

There are devices running Android from >12 years ago that can’t get apps any other way than F-Droid because Google Play Services no longer works on them.

As expected, nobody cares about “reader mode”. Only once in my life has it ever come in handy… It was a website that was so badly designed I swore never to go back to it ever again.

I forget what it was but apparently I wasn’t the only one and thus, it must’ve died a fast death as I haven’t seen it ever again (otherwise I’d remember).

Basically, any website that gets users so frustrated that they resort to reader/simplified mode isn’t going to last very long. If I had my way I would change the messages:

“This website appears to be total shit. Do you want Firefox to try to fix it so your eyes don’t bleed trying to get through it?”

I want an extension that does this, actually! It doesn’t need to actually modify the page. Just give me a virtual assistant to commiserate with…

“The people who made this website should have their browser’s back button removed entirely as punishment for erecting this horror!”